TextureViewSource

//TextureView

private TextureLayer mLayer;

private SurfaceTexture mSurface;

private SurfaceTextureListener mListener;

public void setSurfaceTextureListener(SurfaceTextureListener listener) {

mListener = listener;

}

draw

@Override

public final void draw(Canvas canvas) {

mPrivateFlags = (mPrivateFlags & ~PFLAG_DIRTY_MASK) | PFLAG_DRAWN;

/* Simplify drawing to guarantee the layer is the only thing drawn - so e.g. no background,

scrolling, or fading edges. This guarantees all drawing is in the layer, so drawing

properties (alpha, layer paint) affect all of the content of a TextureView. */

if (canvas.isHardwareAccelerated()) {

DisplayListCanvas displayListCanvas = (DisplayListCanvas) canvas;

TextureLayer layer = getTextureLayer();//main

if (layer != null) {

applyUpdate();

applyTransformMatrix();

mLayer.setLayerPaint(mLayerPaint); // ensure layer paint is up to date

displayListCanvas.drawTextureLayer(layer);

}

}

}

Creating or updating the TextureLayer

TextureLayer getTextureLayer() {

if (mLayer == null) {

if (mAttachInfo == null || mAttachInfo.mThreadedRenderer == null) {

return null;

}

mLayer = mAttachInfo.mThreadedRenderer.createTextureLayer();//main1

boolean createNewSurface = (mSurface == null);

if (createNewSurface) {

// Create a new SurfaceTexture for the layer.

mSurface = new SurfaceTexture(false);//main2

nCreateNativeWindow(mSurface);//main2

}

mLayer.setSurfaceTexture(mSurface);//main2

mSurface.setDefaultBufferSize(getWidth(), getHeight());

mSurface.setOnFrameAvailableListener(mUpdateListener, mAttachInfo.mHandler);//main3

if (mListener != null && createNewSurface) {

mListener.onSurfaceTextureAvailable(mSurface, getWidth(), getHeight());//main4

}

mLayer.setLayerPaint(mLayerPaint);

}

if (mUpdateSurface) {

// Someone has requested that we use a specific SurfaceTexture, so

// tell mLayer about it and set the SurfaceTexture to use the

// current view size.

mUpdateSurface = false;

// Since we are updating the layer, force an update to ensure its

// parameters are correct (width, height, transform, etc.)

updateLayer();//main5

mMatrixChanged = true;

mLayer.setSurfaceTexture(mSurface);//main5

mSurface.setDefaultBufferSize(getWidth(), getHeight());//main5

}

return mLayer;

}
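The lazy creation in getTextureLayer can be condensed into a small standalone model. This is only a sketch in plain C++ so that it runs outside Android; `FakeTextureView`, `FakeSurface`, and `FakeLayer` are hypothetical stand-ins, not framework classes. It illustrates two properties of the method: nothing is created until the view is attached to a renderer, and the availability callback fires only when the view created the surface itself.

```cpp
#include <cassert>
#include <functional>
#include <memory>

// Hypothetical stand-ins for SurfaceTexture and TextureLayer.
struct FakeSurface {
    int width = 0;
    int height = 0;
};

struct FakeLayer {
    std::shared_ptr<FakeSurface> surface;
};

// Simplified model of the lazy creation in getTextureLayer().
class FakeTextureView {
public:
    bool attached = false;  // models mAttachInfo / mThreadedRenderer being non-null
    int width = 0;
    int height = 0;
    std::function<void(FakeSurface&, int, int)> onSurfaceAvailable;  // models mListener

    FakeLayer* getTextureLayer() {
        if (!mLayer) {
            if (!attached) {
                return nullptr;  // no renderer yet, so no layer can be created
            }
            mLayer.reset(new FakeLayer());
            const bool createNewSurface = (mSurface == nullptr);
            if (createNewSurface) {
                mSurface = std::make_shared<FakeSurface>();
            }
            mLayer->surface = mSurface;
            mSurface->width = width;
            mSurface->height = height;
            // Notify only when this view created the surface itself.
            if (onSurfaceAvailable && createNewSurface) {
                onSurfaceAvailable(*mSurface, width, height);
            }
        }
        return mLayer.get();
    }

private:
    std::unique_ptr<FakeLayer> mLayer;
    std::shared_ptr<FakeSurface> mSurface;
};
```

Calling `getTextureLayer()` repeatedly after the first successful call returns the same layer and does not notify the listener again, which matches the `mLayer == null` guard in the original method.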

createTextureLayer

nCreateTextureLayer

/**

* Creates a new hardware layer. A hardware layer built by calling this

* method will be treated as a texture layer, instead of as a render target.

*

* @return A hardware layer

*/

TextureLayer createTextureLayer() {

long layer = nCreateTextureLayer(mNativeProxy);

return TextureLayer.adoptTextureLayer(this, layer);

}

//frameworks/base/core/jni/android_view_ThreadedRenderer.cpp

static jlong android_view_ThreadedRenderer_createTextureLayer(JNIEnv* env, jobject clazz,

jlong proxyPtr) {

RenderProxy* proxy = reinterpret_cast<RenderProxy*>(proxyPtr);

DeferredLayerUpdater* layer = proxy->createTextureLayer();

return reinterpret_cast<jlong>(layer);

}

//frameworks/base/libs/hwui/renderthread/RenderProxy.cpp

DeferredLayerUpdater* RenderProxy::createTextureLayer() {

return mRenderThread.queue().runSync([this]() -> auto {

return mContext->createTextureLayer();

});

}

//frameworks/base/libs/hwui/renderthread/CanvasContext.cpp

DeferredLayerUpdater* CanvasContext::createTextureLayer() {

return mRenderPipeline->createTextureLayer();

}

CanvasContext* CanvasContext::create(RenderThread& thread, bool translucent,

RenderNode* rootRenderNode, IContextFactory* contextFactory) {

auto renderType = Properties::getRenderPipelineType();

switch (renderType) {

case RenderPipelineType::OpenGL:

return new CanvasContext(thread, translucent, rootRenderNode, contextFactory,

std::make_unique<OpenGLPipeline>(thread));

case RenderPipelineType::SkiaGL:

return new CanvasContext(thread, translucent, rootRenderNode, contextFactory,

std::make_unique<skiapipeline::SkiaOpenGLPipeline>(thread));

case RenderPipelineType::SkiaVulkan:

return new CanvasContext(thread, translucent, rootRenderNode, contextFactory,

std::make_unique<skiapipeline::SkiaVulkanPipeline>(thread));

default:

LOG_ALWAYS_FATAL("canvas context type %d not supported", (int32_t)renderType);

break;

}

return nullptr;

}

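SkiaGL here refers to the OpenGL mode that runs on top of Skia. Vulkan is the better-performing option and a leaner framework than OpenGL ES. Android P uses SkiaOpenGLPipeline by default, whereas Android O uses OpenGLPipeline. Since this walkthrough targets Android 9.0, we analyze SkiaOpenGLPipeline. Judging from the source, the logic that creates a TextureLayer is the same in Android P and Android O.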

//frameworks/base/libs/hwui/pipeline/skia/SkiaOpenGLPipeline.cpp

DeferredLayerUpdater* SkiaOpenGLPipeline::createTextureLayer() {

mEglManager.initialize();

return new DeferredLayerUpdater(mRenderThread.renderState(), createLayer, Layer::Api::OpenGL);

}

static Layer* createLayer(RenderState& renderState, uint32_t layerWidth, uint32_t layerHeight,

sk_sp<SkColorFilter> colorFilter, int alpha, SkBlendMode mode, bool blend) {

GlLayer* layer =

new GlLayer(renderState, layerWidth, layerHeight, colorFilter, alpha, mode, blend);

layer->generateTexture();

return layer;

}

TextureLayer.adoptTextureLayer

static TextureLayer adoptTextureLayer(ThreadedRenderer renderer, long layer) {

return new TextureLayer(renderer, layer);

}

new SurfaceTexture

public SurfaceTexture(boolean singleBufferMode) {

mCreatorLooper = Looper.myLooper();

mIsSingleBuffered = singleBufferMode;

nativeInit(true, 0, singleBufferMode, new WeakReference<SurfaceTexture>(this));

}

new GLConsumer

//frameworks/base/core/jni/android/graphics/SurfaceTexture.cpp

static void SurfaceTexture_init(JNIEnv* env, jobject thiz, jboolean isDetached,

jint texName, jboolean singleBufferMode, jobject weakThiz)

{

sp<IGraphicBufferProducer> producer;

sp<IGraphicBufferConsumer> consumer;

BufferQueue::createBufferQueue(&producer, &consumer);

sp<GLConsumer> surfaceTexture;

if (isDetached) {

surfaceTexture = new GLConsumer(consumer, GL_TEXTURE_EXTERNAL_OES,

true, !singleBufferMode);

} else {

surfaceTexture = new GLConsumer(consumer, texName,

GL_TEXTURE_EXTERNAL_OES, true, !singleBufferMode);

}

SurfaceTexture_setSurfaceTexture(env, thiz, surfaceTexture);

SurfaceTexture_setProducer(env, thiz, producer);

jclass clazz = env->GetObjectClass(thiz);

if (clazz == NULL) {

jniThrowRuntimeException(env,

"Can't find android/graphics/SurfaceTexture");

return;

}

sp<JNISurfaceTextureContext> ctx(new JNISurfaceTextureContext(env, weakThiz,clazz));

surfaceTexture->setFrameAvailableListener(ctx);

SurfaceTexture_setFrameAvailableListener(env, thiz, ctx); // GLConsumer is essentially a graphic buffer consumer. After the Surface calls queueBuffer, the listener registered through setFrameAvailableListener is invoked.

}

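GLConsumer can be initialized in two ways: detached or non-detached. What is the difference? The main one is that the non-detached form takes a texName, the texture id, at construction time. An OpenGL ES texture belongs to the OpenGL context of its thread. So when a TextureView renders the same SurfaceTexture from a different thread, the SurfaceTexture must first be detached and then bound again to a new texture in the current thread's context.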
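A minimal sketch of the attach/detach idea, assuming a consumer that may be bound to at most one texture name at a time. `FakeGlConsumer` is a hypothetical model, not the real GLConsumer class, and the rule that a second attach is refused is an assumption of this model.

```cpp
#include <cassert>

// Simplified model of a consumer bound to at most one texture name at a time.
class FakeGlConsumer {
public:
    // Detached construction: no texture name is known yet.
    FakeGlConsumer() : mAttached(false), mTexName(0) {}

    // Attached construction: the caller supplies the texture name up front.
    explicit FakeGlConsumer(unsigned texName) : mAttached(true), mTexName(texName) {}

    // Bind to a texture of the calling thread's context; refused if already attached.
    bool attachToContext(unsigned texName) {
        if (mAttached) {
            return false;
        }
        mAttached = true;
        mTexName = texName;
        return true;
    }

    // Release the current binding so that another context can attach later.
    bool detachFromContext() {
        if (!mAttached) {
            return false;
        }
        mAttached = false;
        mTexName = 0;
        return true;
    }

    bool isAttached() const { return mAttached; }
    unsigned texName() const { return mTexName; }

private:
    bool mAttached;
    unsigned mTexName;
};
```

In this model, moving the consumer to a texture owned by another context means calling `detachFromContext()` first and then `attachToContext()` with the new texture name.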

static void SurfaceTexture_setFrameAvailableListener(JNIEnv* env,

jobject thiz, sp<GLConsumer::FrameAvailableListener> listener)

{

GLConsumer::FrameAvailableListener* const p =

(GLConsumer::FrameAvailableListener*)

env->GetLongField(thiz, fields.frameAvailableListener);

if (listener.get()) {

listener->incStrong((void*)SurfaceTexture_setSurfaceTexture);

}

if (p) {

p->decStrong((void*)SurfaceTexture_setSurfaceTexture);

}

env->SetLongField(thiz, fields.frameAvailableListener, (jlong)listener.get());

}

Whenever a graphic buffer is handed over through queueBuffer, the following method is called:

void JNISurfaceTextureContext::onFrameAvailable(const BufferItem& /* item */)

{

bool needsDetach = false;

JNIEnv* env = getJNIEnv(&needsDetach);

if (env != NULL) {

env->CallStaticVoidMethod(mClazz, fields.postEvent, mWeakThiz);

} else {

ALOGW("onFrameAvailable event will not posted");

}

if (needsDetach) {

detachJNI();

}

}

This method is essentially a JNI callback into the static postEventFromNative method of SurfaceTexture:

private static void postEventFromNative(WeakReference<SurfaceTexture> weakSelf) {

SurfaceTexture st = weakSelf.get();

if (st != null) {

Handler handler = st.mOnFrameAvailableHandler;

if (handler != null) {

handler.sendEmptyMessage(0);

}

}

}
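The weak-reference hand-off in postEventFromNative can be sketched in plain C++. `FakeHandler` and `FakeSurfaceTexture` are hypothetical stand-ins for Handler and SurfaceTexture; the point is only that the native side holds a weak reference, so a frame event that arrives after the SurfaceTexture is gone is dropped silently.

```cpp
#include <cassert>
#include <memory>
#include <queue>

// Hypothetical stand-in for a Handler: it only records posted messages.
struct FakeHandler {
    std::queue<int> messages;
    void sendEmptyMessage(int what) { messages.push(what); }
};

// Hypothetical stand-in for SurfaceTexture.
struct FakeSurfaceTexture {
    FakeHandler* onFrameAvailableHandler = nullptr;
};

// Mirrors the shape of postEventFromNative: resolve the weak reference,
// then post to the handler only if both still exist.
void postEventFromNative(const std::weak_ptr<FakeSurfaceTexture>& weakSelf) {
    std::shared_ptr<FakeSurfaceTexture> st = weakSelf.lock();
    if (st) {
        FakeHandler* handler = st->onFrameAvailableHandler;
        if (handler != nullptr) {
            handler->sendEmptyMessage(0);
        }
    }
}
```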

nCreateNativeWindow(mSurface)

//frameworks/base/core/jni/android_view_TextureView.cpp

static void android_view_TextureView_createNativeWindow(JNIEnv* env, jobject textureView,

jobject surface) {

sp<IGraphicBufferProducer> producer(SurfaceTexture_getProducer(env, surface));

sp<ANativeWindow> window = new Surface(producer, true);

window->incStrong((void*)android_view_TextureView_createNativeWindow);

SET_LONG(textureView, gTextureViewClassInfo.nativeWindow, jlong(window.get()));

}

sp<IGraphicBufferProducer> SurfaceTexture_getProducer(JNIEnv* env, jobject thiz) {

return (IGraphicBufferProducer*)env->GetLongField(thiz, fields.producer);

}

This hands the SurfaceTexture's IGraphicBufferProducer to a Surface, which can then use it to dequeue and queue graphic buffers.
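The producer/consumer relationship can be illustrated with a toy queue. This is a sketch under simplifying assumptions: `FakeBufferQueue` is hypothetical, frames are plain integers, and buffer slots, fences, and dequeueBuffer are left out. It only shows that queueing on the producer side notifies the frame-available listener, and that the consumer then acquires frames in order.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

// Toy model of a buffer queue shared by a producer (Surface) and a consumer (GLConsumer).
class FakeBufferQueue {
public:
    void setFrameAvailableListener(std::function<void()> listener) {
        mListener = std::move(listener);
    }

    // Producer side: hand a finished frame to the queue and notify the consumer.
    void queueBuffer(int frame) {
        mQueued.push_back(frame);
        if (mListener) {
            mListener();
        }
    }

    // Consumer side: take the oldest queued frame; returns false if none is pending.
    bool acquireBuffer(int* outFrame) {
        if (mQueued.empty()) {
            return false;
        }
        *outFrame = mQueued.front();
        mQueued.pop_front();
        return true;
    }

private:
    std::deque<int> mQueued;
    std::function<void()> mListener;
};
```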

mSurface.setOnFrameAvailableListener

private final SurfaceTexture.OnFrameAvailableListener mUpdateListener =

new SurfaceTexture.OnFrameAvailableListener() {

@Override

public void onFrameAvailable(SurfaceTexture surfaceTexture) {

updateLayer();

invalidate();

}

};

private void updateLayer() {

synchronized (mLock) {

mUpdateLayer = true;

}

}

Drawing

The getTextureLayer call inside draw sets up the TextureView's drawing environment. The following code then runs:

if (layer != null) {

applyUpdate();//1 prepare the TextureLayer update

applyTransformMatrix();//2

mLayer.setLayerPaint(mLayerPaint); // ensure layer paint is up to date 3

displayListCanvas.drawTextureLayer(layer);//4

}

applyUpdate

private void applyUpdate() {

if (mLayer == null) {

return;

}

synchronized (mLock) {

if (mUpdateLayer) {

mUpdateLayer = false;

} else {

return;

}

}

mLayer.prepare(getWidth(), getHeight(), mOpaque);

mLayer.updateSurfaceTexture();

if (mListener != null) {

mListener.onSurfaceTextureUpdated(mSurface);

}

}

TextureLayer.prepare

public boolean prepare(int width, int height, boolean isOpaque) {

return nPrepare(mFinalizer.get(), width, height, isOpaque);

}

android_view_TextureLayer.cpp

static jboolean TextureLayer_prepare(JNIEnv* env, jobject clazz,

jlong layerUpdaterPtr, jint width, jint height, jboolean isOpaque) {

DeferredLayerUpdater* layer = reinterpret_cast<DeferredLayerUpdater*>(layerUpdaterPtr);

bool changed = false;

changed |= layer->setSize(width, height);

changed |= layer->setBlend(!isOpaque);

return changed;

}

As shown, prepare simply tells the DeferredLayerUpdater whether blending is enabled and what its drawing size is. In other words, the TextureView's width and height are stored in the DeferredLayerUpdater.

TextureLayer.updateSurfaceTexture

android_view_TextureLayer.cpp

static void TextureLayer_updateSurfaceTexture(JNIEnv* env, jobject clazz,

jlong layerUpdaterPtr) {

DeferredLayerUpdater* layer = reinterpret_cast<DeferredLayerUpdater*>(layerUpdaterPtr);

layer->updateTexImage();

}

This calls updateTexImage on the DeferredLayerUpdater, which sets the flag that requests a texture refresh.
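The flag handshake between onFrameAvailable and applyUpdate can be modelled as follows. `FakeUpdateState` is a hypothetical class and the counter stands in for the call to updateSurfaceTexture; the sketch shows that several frame notifications between two draw passes collapse into a single texture refresh request.

```cpp
#include <cassert>
#include <mutex>

// Simplified model of the mUpdateLayer flag guarded by mLock.
class FakeUpdateState {
public:
    // Producer side: may be called whenever a new frame has been queued.
    void onFrameAvailable() {
        std::lock_guard<std::mutex> guard(mLock);
        mUpdateLayer = true;
    }

    // UI side: called once per draw pass; consumes the flag if it is set.
    void applyUpdate() {
        {
            std::lock_guard<std::mutex> guard(mLock);
            if (!mUpdateLayer) {
                return;
            }
            mUpdateLayer = false;
        }
        ++mTexImageRequests;  // stands in for mLayer.updateSurfaceTexture()
    }

    int texImageRequests() const { return mTexImageRequests; }

private:
    std::mutex mLock;
    bool mUpdateLayer = false;
    int mTexImageRequests = 0;
};
```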

applyTransformMatrix

private void applyTransformMatrix() {

if (mMatrixChanged && mLayer != null) {

mLayer.setTransform(mMatrix);

mMatrixChanged = false;

}

}

//frameworks/base/core/java/android/view/TextureLayer.java

public void setTransform(Matrix matrix) {

nSetTransform(mFinalizer.get(), matrix.native_instance);

mRenderer.pushLayerUpdate(this);

}

nSetTransform

nSetTransform stores the Matrix in the DeferredLayerUpdater.

mRenderer.pushLayerUpdate

This is the core step: it calls pushLayerUpdate on ThreadedRenderer, which queues the TextureLayer for the next frame.

//frameworks/base/core/jni/android_view_ThreadedRenderer.cpp

static void android_view_ThreadedRenderer_pushLayerUpdate(JNIEnv* env, jobject clazz,

jlong proxyPtr, jlong layerPtr) {

RenderProxy* proxy = reinterpret_cast<RenderProxy*>(proxyPtr);

DeferredLayerUpdater* layer = reinterpret_cast<DeferredLayerUpdater*>(layerPtr);

proxy->pushLayerUpdate(layer);

}

//frameworks/base/libs/hwui/renderthread/RenderProxy.cpp

void RenderProxy::pushLayerUpdate(DeferredLayerUpdater* layer) {

mDrawFrameTask.pushLayerUpdate(layer);

}

Here the DeferredLayerUpdater is stored in the DrawFrameTask, where it waits for the later ViewRootImpl flow to draw everything the DrawFrameTask holds in one pass.

//frameworks/base/libs/hwui/renderthread/DrawFrameTask.cpp

/*********************************************

* Single frame data

*********************************************/

std::vector<sp<DeferredLayerUpdater> > mLayers;

void DrawFrameTask::pushLayerUpdate(DeferredLayerUpdater* layer) {

for (size_t i = 0; i < mLayers.size(); i++) {

if (mLayers[i].get() == layer) {

return;

}

}

mLayers.push_back(layer);

}

DrawFrameTask adds the layer to its mLayers collection only if it is not already stored there.
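The de-duplication in pushLayerUpdate is easy to reproduce in isolation. In this sketch `FakeLayerUpdater` and `FakeDrawFrameTask` are hypothetical names, and `std::shared_ptr` replaces the `sp<>` smart pointer used in the framework; `pendingCount` is added only so that the behaviour can be observed.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <vector>

// Hypothetical stand-in for DeferredLayerUpdater.
struct FakeLayerUpdater {
    int id;
};

// Simplified model of the per-frame layer list kept by DrawFrameTask.
class FakeDrawFrameTask {
public:
    // Store the layer only if this exact object is not queued already.
    void pushLayerUpdate(const std::shared_ptr<FakeLayerUpdater>& layer) {
        for (std::size_t i = 0; i < mLayers.size(); i++) {
            if (mLayers[i].get() == layer.get()) {
                return;
            }
        }
        mLayers.push_back(layer);
    }

    std::size_t pendingCount() const { return mLayers.size(); }

private:
    std::vector<std::shared_ptr<FakeLayerUpdater> > mLayers;
};
```

The comparison is by pointer identity, so pushing the same updater several times in one frame, for example from repeated setTransform calls, leaves a single entry.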

TextureLayer.setLayerPaint

static void TextureLayer_setLayerPaint(JNIEnv* env, jobject clazz,

jlong layerUpdaterPtr, jlong paintPtr) {

DeferredLayerUpdater* layer = reinterpret_cast<DeferredLayerUpdater*>(layerUpdaterPtr);

if (layer) {

Paint* paint = reinterpret_cast<Paint*>(paintPtr);

layer->setPaint(paint);

}

}

DisplayListCanvas.drawTextureLayer

//frameworks/base/core/java/android/view/DisplayListCanvas.java

void drawTextureLayer(TextureLayer layer) {

nDrawTextureLayer(mNativeCanvasWrapper, layer.getLayerHandle());

}

//frameworks/base/core/jni/android_view_DisplayListCanvas.cpp

static void android_view_DisplayListCanvas_drawTextureLayer(jlong canvasPtr, jlong layerPtr) {

Canvas* canvas = reinterpret_cast<Canvas*>(canvasPtr);

DeferredLayerUpdater* layer = reinterpret_cast<DeferredLayerUpdater*>(layerPtr);

canvas->drawLayer(layer);

}

On the native side, DisplayListCanvas maps to a hardware-rendering Canvas that is chosen by pipeline type. Here we look at the default, SkiaRecordingCanvas, whose drawLayer method is called next.

SkiaRecordingCanvas.drawLayer

//frameworks/base/libs/hwui/pipeline/skia/SkiaRecordingCanvas.cpp

void SkiaRecordingCanvas::drawLayer(uirenderer::DeferredLayerUpdater* layerUpdater) {

if (layerUpdater != nullptr) {

// Create a ref-counted drawable, which is kept alive by sk_sp in SkLiteDL.

sk_sp<SkDrawable> drawable(new LayerDrawable(layerUpdater));

drawDrawable(drawable.get());

}

}

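Here a smart pointer wraps a LayerDrawable, which in turn holds the DeferredLayerUpdater. drawDrawable then draws the content of the LayerDrawable. SkiaRecordingCanvas inherits from SkiaCanvas and, as the previous article showed, the real work in SkiaCanvas is done by SkCanvas, so we go straight to the drawDrawable method of SkCanvas.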

SkCanvas.drawDrawable

///external/skia/src/core/SkCanvas.cpp

void drawDrawable(SkDrawable* drawable, const SkMatrix* matrix = nullptr);

void SkCanvas::drawDrawable(SkDrawable* dr, const SkMatrix* matrix) {

#ifndef SK_BUILD_FOR_ANDROID_FRAMEWORK

TRACE_EVENT0("skia", TRACE_FUNC);

#endif

RETURN_ON_NULL(dr);

if (matrix && matrix->isIdentity()) {

matrix = nullptr;

}

this->onDrawDrawable(dr, matrix);

}

void SkCanvas::onDrawDrawable(SkDrawable* dr, const SkMatrix* matrix) {

// drawable bounds are no longer reliable (e.g. android displaylist)

// so don't use them for quick-reject

dr->draw(this, matrix);

}

SkDrawable.draw

///external/skia/src/core/SkDrawable.cpp

void SkDrawable::draw(SkCanvas* canvas, const SkMatrix* matrix) {

SkAutoCanvasRestore acr(canvas, true);

if (matrix) {

canvas->concat(*matrix);

}

this->onDraw(canvas);

....

}

SkDrawable::draw calls onDraw, a virtual function that LayerDrawable implements.

LayerDrawable.onDraw

void LayerDrawable::onDraw(SkCanvas* canvas) {

Layer* layer = mLayerUpdater->backingLayer();

if (layer) {

DrawLayer(canvas->getGrContext(), canvas, layer);

}

}

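This obtains the Layer object from the DeferredLayerUpdater. The Layer is the GlLayer produced by the function pointer that the DeferredLayerUpdater stores. On the first refresh of the UI, however, no GlLayer exists yet, so execution stops here.

So we seem to be stuck: when exactly is the GlLayer created?

The syncFrameState method of hardware-accelerated drawing is what turns a DeferredLayerUpdater into a GlLayer.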

DrawFrameTask.syncFrameState

bool DrawFrameTask::syncFrameState(TreeInfo& info) {

ATRACE_CALL();

int64_t vsync = mFrameInfo[static_cast<int>(FrameInfoIndex::Vsync)];

mRenderThread->timeLord().vsyncReceived(vsync);

bool canDraw = mContext->makeCurrent();

mContext->unpinImages();

for (size_t i = 0; i < mLayers.size(); i++) {

mLayers[i]->apply();

}

mLayers.clear();

....

return info.prepareTextures;

}

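As shown, once the OpenGL ES context has been made current on this thread, apply is called on every layer and the mLayers collection is cleared.

Now let us look at the apply method of DeferredLayerUpdater.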

DeferredLayerUpdater.apply

///frameworks/base/libs/hwui/DeferredLayerUpdater.cpp

void DeferredLayerUpdater::apply() {

if (!mLayer) {

mLayer = mCreateLayerFn(mRenderState, mWidth, mHeight, mColorFilter, mAlpha, mMode, mBlend);

}

mLayer->setColorFilter(mColorFilter);

mLayer->setAlpha(mAlpha, mMode);

if (mSurfaceTexture.get()) {

if (mLayer->getApi() == Layer::Api::Vulkan) {

...

} else {

if (!mGLContextAttached) {

mGLContextAttached = true;

mUpdateTexImage = true;

mSurfaceTexture->attachToContext(static_cast<GlLayer*>(mLayer)->getTextureId());//main

}

if (mUpdateTexImage) {

mUpdateTexImage = false;

doUpdateTexImage();//main

}

GLenum renderTarget = mSurfaceTexture->getCurrentTextureTarget();

static_cast<GlLayer*>(mLayer)->setRenderTarget(renderTarget);//main

}

if (mTransform) {

mLayer->getTransform().load(*mTransform);//main

setTransform(nullptr);

}

}

}

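If mLayer is null, the function pointer saved earlier is called to create a GlLayer. mSurfaceTexture here is the GLConsumer saved earlier.

- 1. Call attachToContext on the GLConsumer to attach it to the current context and bind it to the texture id of the GlLayer.

- 2. Call doUpdateTexImage to update the texture data.

- 3. Set the render target of the GlLayer to the current texture target of the GLConsumer.

- 4. Store the transform matrix in the GlLayer.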
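The deferred creation described above can be sketched as a small model. All names here (`FakeGlLayer`, `FakeDeferredLayerUpdater`, `createFakeLayer`) are hypothetical, and the GL work is reduced to a counter. The sketch shows that the backing layer is null until apply runs, that the first attach forces one texture refresh, and that later refreshes happen only when updateTexImage raised the flag.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <utility>

// Hypothetical stand-in for GlLayer: counts how often its texture was refreshed.
struct FakeGlLayer {
    int width;
    int height;
    int texImageUpdates;
};

class FakeDeferredLayerUpdater {
public:
    typedef std::function<std::unique_ptr<FakeGlLayer>(int, int)> CreateLayerFn;

    explicit FakeDeferredLayerUpdater(CreateLayerFn fn) : mCreateLayerFn(std::move(fn)) {}

    void setSize(int width, int height) {
        mWidth = width;
        mHeight = height;
    }
    void updateTexImage() { mUpdateTexImage = true; }  // only raises a flag
    FakeGlLayer* backingLayer() const { return mLayer.get(); }

    // Models apply(): runs during frame sync, when a GL context is current.
    void apply() {
        if (!mLayer) {
            mLayer = mCreateLayerFn(mWidth, mHeight);  // deferred creation
        }
        if (!mGLContextAttached) {
            mGLContextAttached = true;
            mUpdateTexImage = true;  // the first attach always forces a refresh
        }
        if (mUpdateTexImage) {
            mUpdateTexImage = false;
            ++mLayer->texImageUpdates;  // stands in for doUpdateTexImage()
        }
    }

private:
    CreateLayerFn mCreateLayerFn;
    std::unique_ptr<FakeGlLayer> mLayer;
    int mWidth = 0;
    int mHeight = 0;
    bool mGLContextAttached = false;
    bool mUpdateTexImage = false;
};

// Hypothetical factory, playing the role of the pipeline's createLayer function.
inline std::unique_ptr<FakeGlLayer> createFakeLayer(int width, int height) {
    return std::unique_ptr<FakeGlLayer>(new FakeGlLayer{width, height, 0});
}
```

Storing a factory instead of a layer is what lets the updater be created on the UI thread while the texture itself is allocated later, on the thread that owns the GL context.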

……………………………………….

LayerDrawable.DrawLayer

//frameworks/base/libs/hwui/pipeline/skia/LayerDrawable.cpp

bool LayerDrawable::DrawLayer(GrContext* context, SkCanvas* canvas, Layer* layer,

const SkRect* dstRect) {

...

// transform the matrix based on the layer

SkMatrix layerTransform;

layer->getTransform().copyTo(layerTransform);

sk_sp<SkImage> layerImage;

const int layerWidth = layer->getWidth();

const int layerHeight = layer->getHeight();

if (layer->getApi() == Layer::Api::OpenGL) {

GlLayer* glLayer = static_cast<GlLayer*>(layer);

GrGLTextureInfo externalTexture;

externalTexture.fTarget = glLayer->getRenderTarget();

externalTexture.fID = glLayer->getTextureId();

externalTexture.fFormat = GL_RGBA8;

GrBackendTexture backendTexture(layerWidth, layerHeight, GrMipMapped::kNo, externalTexture);

layerImage = SkImage::MakeFromTexture(context, backendTexture, kTopLeft_GrSurfaceOrigin,

kPremul_SkAlphaType, nullptr);

} else {

...

}

if (layerImage) {

SkMatrix textureMatrixInv;

layer->getTexTransform().copyTo(textureMatrixInv);

// TODO: after skia bug https://bugs.chromium.org/p/skia/issues/detail?id=7075 is fixed

// use bottom left origin and remove flipV and invert transformations.

SkMatrix flipV;

flipV.setAll(1, 0, 0, 0, -1, 1, 0, 0, 1);

textureMatrixInv.preConcat(flipV);

textureMatrixInv.preScale(1.0f / layerWidth, 1.0f / layerHeight);

textureMatrixInv.postScale(layerWidth, layerHeight);

SkMatrix textureMatrix;

if (!textureMatrixInv.invert(&textureMatrix)) {

textureMatrix = textureMatrixInv;

}

SkMatrix matrix = SkMatrix::Concat(layerTransform, textureMatrix);

SkPaint paint;

paint.setAlpha(layer->getAlpha());

paint.setBlendMode(layer->getMode());

paint.setColorFilter(layer->getColorSpaceWithFilter());

const bool nonIdentityMatrix = !matrix.isIdentity();

if (nonIdentityMatrix) {

canvas->save();

canvas->concat(matrix);

}

if (dstRect) {

SkMatrix matrixInv;

if (!matrix.invert(&matrixInv)) {

matrixInv = matrix;

}

SkRect srcRect = SkRect::MakeIWH(layerWidth, layerHeight);

matrixInv.mapRect(&srcRect);

SkRect skiaDestRect = *dstRect;

matrixInv.mapRect(&skiaDestRect);

canvas->drawImageRect(layerImage.get(), srcRect, skiaDestRect, &paint,

SkCanvas::kFast_SrcRectConstraint);

} else {

canvas->drawImage(layerImage.get(), 0, 0, &paint);

}

// restore the original matrix

if (nonIdentityMatrix) {

canvas->restore();

}

}

return layerImage;

}

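The following steps then run:

- 1. The layer is identified as an OpenGL layer. A GrBackendTexture is then built from the texture stored in the GlLayer; it carries the width and height of the current TextureLayer (the TextureView size saved by applyUpdate during draw). Finally, an SkImage is created from the GrBackendTexture.

- 2. The SkImage is positioned according to the transform matrices.

- 3. The canvas draws the pixels of the SkImage into a region.

This completes the walkthrough of TextureView.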
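The matrix composition in DrawLayer is easier to follow with numbers. The sketch below re-implements only the arithmetic with a hypothetical `Mat3` type instead of SkMatrix, assuming that a "pre" operation multiplies on the right and a "post" operation multiplies on the left. With an identity texture transform, the composed textureMatrixInv is a vertical flip in pixel space: a point (x, y) maps to (x, layerHeight - y).

```cpp
#include <cassert>
#include <cmath>

// Row-major 3x3 matrix; only the affine part is used when mapping points.
struct Mat3 {
    double m[3][3];
};

Mat3 multiply(const Mat3& a, const Mat3& b) {
    Mat3 r = {};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            for (int k = 0; k < 3; ++k) {
                r.m[i][j] += a.m[i][k] * b.m[k][j];
            }
        }
    }
    return r;
}

Mat3 scale(double sx, double sy) {
    Mat3 r = {{{sx, 0.0, 0.0}, {0.0, sy, 0.0}, {0.0, 0.0, 1.0}}};
    return r;
}

// Same order as in DrawLayer: preConcat(flipV), preScale(1/w, 1/h), postScale(w, h).
Mat3 buildTextureMatrixInv(const Mat3& texTransform, double layerWidth, double layerHeight) {
    const Mat3 flipV = {{{1.0, 0.0, 0.0}, {0.0, -1.0, 1.0}, {0.0, 0.0, 1.0}}};
    Mat3 result = multiply(texTransform, flipV);
    result = multiply(result, scale(1.0 / layerWidth, 1.0 / layerHeight));
    result = multiply(scale(layerWidth, layerHeight), result);
    return result;
}

// Apply the affine part of t to the point (x, y).
void mapPoint(const Mat3& t, double x, double y, double* outX, double* outY) {
    *outX = t.m[0][0] * x + t.m[0][1] * y + t.m[0][2];
    *outY = t.m[1][0] * x + t.m[1][1] * y + t.m[1][2];
}
```

Read right to left, the composition first normalizes pixel coordinates to the unit square, flips the vertical axis, applies the texture transform, and scales back to pixels. DrawLayer then inverts this matrix before concatenating it with the layer transform.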

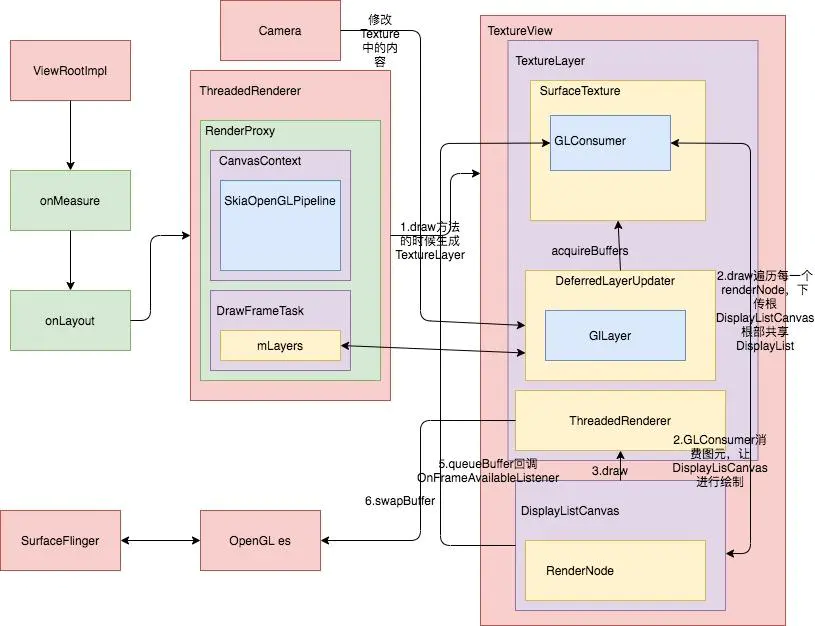

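Core principle diagram

Key roles

To summarize, several roles are critical:

- 1. ThreadedRenderer is the entry point of hardware rendering. It holds the root RenderNode of all hardware rendering and a root DisplayListCanvas. Each View generally has its own DisplayListCanvas; the root exists so that they can share the DisplayList of a single DisplayListCanvas.

- 2. CanvasContext is the hardware-rendering context. It selects the render pipeline that matches the current mode, and the pipeline is what actually performs the drawing for that mode.

- 3. DrawFrameTask holds all of the app's hardware-rendered Layers. These are not the Layers of the SurfaceFlinger (SF) process, and the two must not be confused. For TextureView the Layer is a DeferredLayerUpdater, and the object that does the actual work is the GlLayer.

- 4. TextureLayer plays the role of the TextureView's layer. It ties together the SurfaceTexture (the TextureView's buffer consumer), the DeferredLayerUpdater (the layer updater), and the ThreadedRenderer.

- 5. DeferredLayerUpdater is the layer updater. It acts as a holder and does not allocate a texture object up front, because texture objects are expensive in memory. Only after the first draw call is the GlLayer created, through syncFrameState. The GlLayer is what actually controls the layer, and that layer is in fact an OpenGL ES texture object.

- 6. RenderNode is the node that corresponds to each View in hardware rendering.

- 7. DisplayListCanvas is a canvas produced from a RenderNode. All pixels are drawn onto it, and its DisplayList can be merged with that of the parent View's DisplayListCanvas.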

Flow summary

graph LR

subgraph firstFrame

draw-->createTextureLayer,GLConsumer-->syncFrameState-->createGLLayer

end

createGLLayer-->|invalidate|DrawLayer("LayerDrawable.DrawLayer")

subgraph secondFrame

DrawLayer-->|drawIntoDisplayListCanvas|CanvasContext.draw-->swapBuffers

end

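The TextureView in the earlier example works because its SurfaceTexture was handed to the Camera, which then feeds the SurfaceTexture, the buffer consumer, behind the scenes.

Let us walk through the flow once more.

- 1. Once the TextureView's SurfaceTexture has been set on the Camera, the first draw creates a TextureLayer and a GLConsumer. When that draw traversal ends, syncFrameState runs on the ThreadedRenderer path (DrawFrameTask::syncFrameState), creates the real GlLayer, and invalidate is called to trigger the next round of drawing.

- 2. In the next round, drawTextureLayer of DisplayListCanvas is called again and leads to LayerDrawable::DrawLayer, which draws the pixels into the DisplayListCanvas. Finally the draw method of CanvasContext is called, and its swapBuffers sends the buffer to the SF process.

In other words, we keep updating the texture content in the TextureView, but drawing and submitting the texture depends on the draw signal sent by ViewRootImpl. That is why, unlike software rendering with lockCanvas and unlockCanvasAndPost, there is no visible dequeueBuffer and queueBuffer pair around the drawing. If you have read my article on OpenGL ES software rendering, you will know that swapBuffers itself already includes the dequeueBuffer and queueBuffer steps.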