BitmapSource

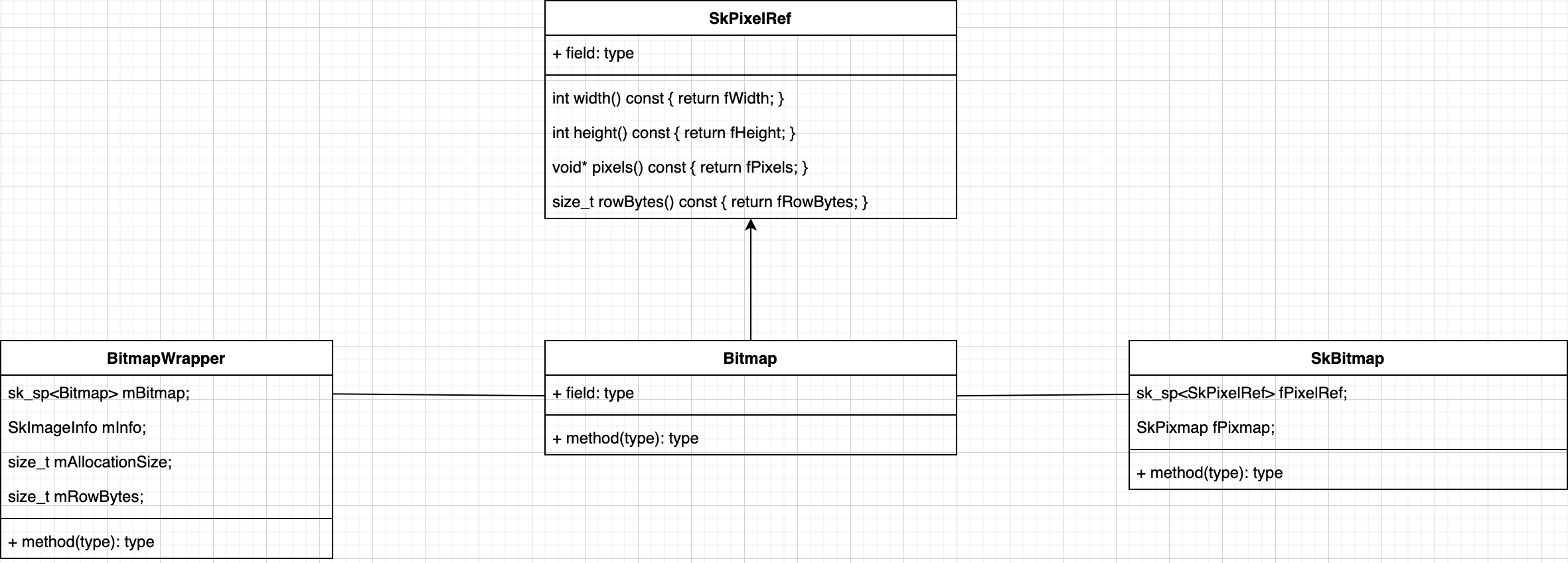

Class design

NativeAllocationRegistry reclaim flow

How ART reclaims a NativeAllocationRegistry allocation (only references whose referent the GC has found unreachable, and will therefore reclaim, are enqueued):

graph TB

ReferenceQueueDaemon.runInternal-->ReferenceQueue.enqueuePending

ReferenceQueue.enqueuePending-->ReferenceQueue.enqueueLocked

ReferenceQueue.enqueueLocked-->Cleaner.clean

Cleaner.clean-->CleanerThunk.run

CleanerThunk.run-->NativeAllocationRegistry.applyFreeFunction
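The chain above can be imitated with the public `java.lang.ref` classes. This is only a rough sketch: `ReclaimChainSketch`, `ThunkRef`, and `drain` are hypothetical names, and `ref.enqueue()` stands in for the GC, which in the real flow enqueues the reference only after the referent becomes unreachable.

```java
import java.lang.ref.PhantomReference;
import java.lang.ref.Reference;
import java.lang.ref.ReferenceQueue;

final class ReclaimChainSketch {
    /** Hypothetical stand-in for Cleaner: a phantom reference carrying cleanup code. */
    static final class ThunkRef extends PhantomReference<Object> {
        private Runnable thunk;

        ThunkRef(Object referent, ReferenceQueue<Object> queue, Runnable thunk) {
            super(referent, queue);
            this.thunk = thunk;
        }

        /** Runs the cleanup code at most once. */
        synchronized void clean() {
            Runnable pending = thunk;
            thunk = null;
            if (pending != null) {
                pending.run();
            }
        }
    }

    /** Plays the daemon's role: cleans every reference currently in the queue. */
    static int drain(ReferenceQueue<Object> queue) {
        int cleaned = 0;
        Reference<?> ref;
        while ((ref = queue.poll()) != null) {
            ((ThunkRef) ref).clean();
            cleaned++;
        }
        return cleaned;
    }

    /** Returns how many times the free callback ran. */
    static int demo() {
        ReferenceQueue<Object> queue = new ReferenceQueue<>();
        int[] freed = {0};
        Object owner = new Object();
        ThunkRef ref = new ThunkRef(owner, queue, () -> freed[0]++);

        drain(queue);   // nothing enqueued yet, so nothing is freed
        ref.enqueue();  // simulates the GC enqueuing the reference
        drain(queue);   // the thunk runs here
        drain(queue);   // queue is empty again, so no second free
        return freed[0];
    }
}
```

The point of the sketch is the ordering: the free callback never runs until the reference has been enqueued and a daemon-like loop has picked it up.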

ImageDecoder

decodeDrawable

public static Drawable decodeDrawable(@NonNull Source src,

@NonNull OnHeaderDecodedListener listener) throws IOException {

return decodeDrawableImpl(src, listener);

}

decodeDrawableImpl

private static Drawable decodeDrawableImpl(@NonNull Source src,

@Nullable OnHeaderDecodedListener listener) throws IOException {

ImageDecoder decoder = src.createImageDecoder();

decoder.mSource = src;

decoder.callHeaderDecoded(listener, src);

Bitmap bm = decoder.decodeBitmapInternal();

return new BitmapDrawable(res, bm);

}

decodeBitmapInternal

private Bitmap decodeBitmapInternal() throws IOException {

checkState();

return nDecodeBitmap(mNativePtr, this, mPostProcessor != null,

mDesiredWidth, mDesiredHeight, mCropRect,

mMutable, mAllocator, mUnpremultipliedRequired,

mConserveMemory, mDecodeAsAlphaMask, mDesiredColorSpace);

}

Source

frameworks/base/core/jni/android/graphics/ImageDecoder.cpp

ImageDecoder.cpp

ImageDecoder_nDecodeBitmap

static jobject ImageDecoder_nDecodeBitmap(JNIEnv* env, jobject /*clazz*/, jlong nativePtr,

jobject jdecoder, jboolean jpostProcess,

jint desiredWidth, jint desiredHeight, jobject jsubset,

jboolean requireMutable, jint allocator,

jboolean requireUnpremul, jboolean preferRamOverQuality,

jboolean asAlphaMask, jobject jcolorSpace) {

......

SkBitmap bm;

auto bitmapInfo = decodeInfo;

if (asAlphaMask && colorType == kGray_8_SkColorType) {

bitmapInfo = bitmapInfo.makeColorType(kAlpha_8_SkColorType);

}

if (!bm.setInfo(bitmapInfo)) {

doThrowIOE(env, "Failed to setInfo properly");

return nullptr;

}

sk_sp<Bitmap> nativeBitmap;

// If we are going to scale or subset, we will create a new bitmap later on,

// so use the heap for the temporary.

// FIXME: Use scanline decoding on only a couple lines to save memory. b/70709380.

if (allocator == ImageDecoder::kSharedMemory_Allocator && !scale && !jsubset) {

nativeBitmap = Bitmap::allocateAshmemBitmap(&bm);

} else {

nativeBitmap = Bitmap::allocateHeapBitmap(&bm); // both nativeBitmap and bm are now populated

}

......

return bitmap::createBitmap(env, nativeBitmap.release(), bitmapCreateFlags, ninePatchChunk,

ninePatchInsets);

}
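The allocator branch in the excerpt reduces to a small decision: ashmem is used only when shared memory was requested and no scaling or subsetting will happen afterwards, since either of those produces a new bitmap later. A minimal sketch of that rule, with hypothetical names (`AllocatorChoiceSketch`, `Backing`, `choose`) and booleans in place of the native arguments:

```java
final class AllocatorChoiceSketch {
    enum Backing { ASHMEM, HEAP }

    /**
     * Mirrors the condition in the excerpt: the temporary goes on the heap
     * whenever a later scale or subset step would replace it anyway.
     */
    static Backing choose(boolean sharedMemoryRequested, boolean willScale, boolean hasSubset) {
        if (sharedMemoryRequested && !willScale && !hasSubset) {
            return Backing.ASHMEM;
        }
        return Backing.HEAP;
    }
}
```

For example, `choose(true, true, false)` yields `HEAP` even though shared memory was requested.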

Bitmap.java

android/graphics/Bitmap.java

createBitmap

public static Bitmap createBitmap(@Nullable DisplayMetrics display, int width, int height,

@NonNull Config config, boolean hasAlpha, @NonNull ColorSpace colorSpace) {

Bitmap bm = nativeCreate(null, 0, width, width, height, config.nativeInt, true, null, null);

return bm;

}

Bitmap constructor

/**

* Private constructor that must receive an already allocated native bitmap

* int (pointer).

*/

// called from JNI

Bitmap(long nativeBitmap, int width, int height, int density,

boolean isMutable, boolean requestPremultiplied,

byte[] ninePatchChunk, NinePatch.InsetStruct ninePatchInsets) {

mWidth = width;

mHeight = height;

mIsMutable = isMutable;

mRequestPremultiplied = requestPremultiplied;

mNativePtr = nativeBitmap;

long nativeSize = NATIVE_ALLOCATION_SIZE + getAllocationByteCount();

NativeAllocationRegistry registry = new NativeAllocationRegistry(

Bitmap.class.getClassLoader(), nativeGetNativeFinalizer(), nativeSize);

registry.registerNativeAllocation(this, nativeBitmap);

}
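The size handed to the registry is a fixed overhead plus the pixel buffer size. A back-of-the-envelope sketch of that arithmetic follows. The overhead value of 32 bytes and the tightly packed row layout are assumptions for illustration, and `NativeSizeSketch` is a hypothetical name.

```java
final class NativeSizeSketch {
    // Assumed fixed overhead of the native wrapper; the real constant is defined in Bitmap.java.
    static final long NATIVE_ALLOCATION_SIZE = 32;

    /** Pixel bytes for a tightly packed bitmap; throws ArithmeticException on overflow. */
    static long pixelBytes(int width, int height, int bytesPerPixel) {
        long rowBytes = Math.multiplyExact((long) width, (long) bytesPerPixel);
        return Math.multiplyExact(rowBytes, (long) height);
    }

    /** Size that would be reported to the registry under the assumptions above. */
    static long registeredSize(int width, int height, int bytesPerPixel) {
        return Math.addExact(NATIVE_ALLOCATION_SIZE, pixelBytes(width, height, bytesPerPixel));
    }
}
```

Under these assumptions a 1920 x 1080 bitmap at 4 bytes per pixel registers 8,294,432 bytes, so the pixel buffer dominates and the fixed overhead is negligible.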

frameworks/base/core/jni/android/graphics/

Bitmap.cpp(graphics)

gBitmapMethods

nativeCreate –> Bitmap_creator

static const JNINativeMethod gBitmapMethods[] = {

{ "nativeCreate", "([IIIIIIZ[FLandroid/graphics/ColorSpace$Rgb$TransferParameters;)Landroid/graphics/Bitmap;",

(void*)Bitmap_creator },

{ "nativeCopy", "(JIZ)Landroid/graphics/Bitmap;",

(void*)Bitmap_copy },

{ "nativeCopyAshmem", "(J)Landroid/graphics/Bitmap;",

(void*)Bitmap_copyAshmem },

{ "nativeCopyAshmemConfig", "(JI)Landroid/graphics/Bitmap;",

(void*)Bitmap_copyAshmemConfig },

{ "nativeGetNativeFinalizer", "()J", (void*)Bitmap_getNativeFinalizer },

{ "nativeRecycle", "(J)Z", (void*)Bitmap_recycle },

{ "nativeReconfigure", "(JIIIZ)V", (void*)Bitmap_reconfigure },

{ "nativeCompress", "(JIILjava/io/OutputStream;[B)Z",

(void*)Bitmap_compress },

{ "nativeErase", "(JI)V", (void*)Bitmap_erase },

{ "nativeRowBytes", "(J)I", (void*)Bitmap_rowBytes },

{ "nativeGetPixel", "(JII)I", (void*)Bitmap_getPixel },

{ "nativeGetPixels", "(J[IIIIIII)V", (void*)Bitmap_getPixels },

{ "nativeSetPixel", "(JIII)V", (void*)Bitmap_setPixel },

{ "nativeSetPixels", "(J[IIIIIII)V", (void*)Bitmap_setPixels },

{ "nativeCopyPixelsToBuffer", "(JLjava/nio/Buffer;)V",

(void*)Bitmap_copyPixelsToBuffer },

{ "nativeCopyPixelsFromBuffer", "(JLjava/nio/Buffer;)V",

(void*)Bitmap_copyPixelsFromBuffer },

};

Bitmap_creator

static jobject Bitmap_creator(JNIEnv* env, jobject, jintArray jColors,

jint offset, jint stride, jint width, jint height,

jint configHandle, jboolean isMutable,

jfloatArray xyzD50, jobject transferParameters) {

SkBitmap bitmap;

sk_sp<Bitmap> nativeBitmap = Bitmap::allocateHeapBitmap(&bitmap);

if (!nativeBitmap) {

ALOGE("OOM allocating Bitmap with dimensions %i x %i", width, height);

doThrowOOME(env);

return NULL;

}

if (jColors != NULL) {

GraphicsJNI::SetPixels(env, jColors, offset, stride, 0, 0, width, height, bitmap);

}

return createBitmap(env, nativeBitmap.release(), getPremulBitmapCreateFlags(isMutable));

}

bitmap::createBitmap

jobject createBitmap(JNIEnv* env, Bitmap* bitmap,

int bitmapCreateFlags, jbyteArray ninePatchChunk, jobject ninePatchInsets,

int density) {

bool isMutable = bitmapCreateFlags & kBitmapCreateFlag_Mutable;

bool isPremultiplied = bitmapCreateFlags & kBitmapCreateFlag_Premultiplied;

// The caller needs to have already set the alpha type properly, so the

// native SkBitmap stays in sync with the Java Bitmap.

BitmapWrapper* bitmapWrapper = new BitmapWrapper(bitmap);

jobject obj = env->NewObject(gBitmap_class, gBitmap_constructorMethodID,

reinterpret_cast<jlong>(bitmapWrapper), bitmap->width(), bitmap->height(), density,

isMutable, isPremultiplied, ninePatchChunk, ninePatchInsets);

return obj;

}
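`createBitmap` unpacks two booleans from a single `int` of bit flags. A small sketch of that pattern follows. The bit values and the behaviour of `premulFlags` (a guess at what `getPremulBitmapCreateFlags` does) are assumptions, and all names are hypothetical.

```java
final class CreateFlagsSketch {
    // Assumed bit values, chosen for illustration only.
    static final int FLAG_MUTABLE = 0x1;
    static final int FLAG_PREMULTIPLIED = 0x2;

    static boolean isMutable(int flags) {
        return (flags & FLAG_MUTABLE) != 0;
    }

    static boolean isPremultiplied(int flags) {
        return (flags & FLAG_PREMULTIPLIED) != 0;
    }

    /** Assumed behaviour: always premultiplied, mutable only on request. */
    static int premulFlags(boolean mutable) {
        int flags = FLAG_PREMULTIPLIED;
        if (mutable) {
            flags |= FLAG_MUTABLE;
        }
        return flags;
    }
}
```

Packing the options into one `int` keeps the JNI signature short; the Java constructor then receives them as separate booleans.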

Bitmap_getNativeFinalizer

static jlong Bitmap_getNativeFinalizer(JNIEnv*, jobject) {

return static_cast<jlong>(reinterpret_cast<uintptr_t>(&Bitmap_destruct));

}

Bitmap_destruct

static void Bitmap_destruct(BitmapWrapper* bitmap) {

delete bitmap;

}

libcore/luni/src/main/java/libcore/util

NativeAllocationRegistry.java

/**

* A NativeAllocationRegistry is used to associate native allocations with

* Java objects and register them with the runtime.

* There are two primary benefits of registering native allocations associated

* with Java objects:

* <ol>

* <li>The runtime will account for the native allocations when scheduling

* garbage collection to run.</li>

* <li>The runtime will arrange for the native allocation to be automatically

* freed by a user-supplied function when the associated Java object becomes

* unreachable.</li>

* </ol>

* A separate NativeAllocationRegistry should be instantiated for each kind

* of native allocation, where the kind of a native allocation consists of the

* native function used to free the allocation and the estimated size of the

* allocation. Once a NativeAllocationRegistry is instantiated, it can be

* used to register any number of native allocations of that kind.

* @hide

*/

public class NativeAllocationRegistry {

public NativeAllocationRegistry(ClassLoader classLoader, long freeFunction, long size) {

this.classLoader = classLoader;

this.freeFunction = freeFunction;

this.size = size;

}

}

registerNativeAllocation

/**

* Registers a new native allocation and associated Java object with the

* runtime.

* This NativeAllocationRegistry's <code>freeFunction</code> will

* automatically be called with <code>nativePtr</code> as its sole

* argument when <code>referent</code> becomes unreachable. If you

* maintain copies of <code>nativePtr</code> outside

* <code>referent</code>, you must not access these after

* <code>referent</code> becomes unreachable, because they may be dangling

* pointers.

* <p>

* The returned Runnable can be used to free the native allocation before

* <code>referent</code> becomes unreachable. The runnable will have no

* effect if the native allocation has already been freed by the runtime

* or by using the runnable.

* <p>

* WARNING: This unconditionally takes ownership, i.e. deallocation

* responsibility of nativePtr. nativePtr will be DEALLOCATED IMMEDIATELY

* if the registration attempt throws an exception (other than one reporting

* a programming error).

*

* @param referent Non-null java object to associate the native allocation with

* @param nativePtr Non-zero address of the native allocation

* @return runnable to explicitly free native allocation

* @throws IllegalArgumentException if either referent or nativePtr is null.

* @throws OutOfMemoryError if there is not enough space on the Java heap

* in which to register the allocation. In this

* case, <code>freeFunction</code> will be

* called with <code>nativePtr</code> as its

* argument before the OutOfMemoryError is

* thrown.

*/

public Runnable registerNativeAllocation(Object referent, long nativePtr) {

if (referent == null) {

throw new IllegalArgumentException("referent is null");

}

if (nativePtr == 0) {

throw new IllegalArgumentException("nativePtr is null");

}

CleanerThunk thunk;

CleanerRunner result;

try {

thunk = new CleanerThunk();

Cleaner cleaner = Cleaner.create(referent, thunk);

result = new CleanerRunner(cleaner);

registerNativeAllocation(this.size);

} catch (VirtualMachineError vme /* probably OutOfMemoryError */) {

applyFreeFunction(freeFunction, nativePtr);

throw vme;

} // Other exceptions are impossible.

// Enable the cleaner only after we can no longer throw anything, including OOME.

thunk.setNativePtr(nativePtr);

return result;

}
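The contract described in the Javadoc (the free function runs once, either when the referent becomes unreachable or when the returned Runnable is invoked first) can be approximated with the public `java.lang.ref.Cleaner` API. This is a sketch, not the libcore implementation: `RegistrySketch` is a hypothetical name, a `LongConsumer` replaces the native function pointer, and the GC accounting calls are left out.

```java
import java.lang.ref.Cleaner;
import java.util.function.LongConsumer;

final class RegistrySketch {
    private static final Cleaner CLEANER = Cleaner.create();

    private final LongConsumer freeFunction;

    RegistrySketch(LongConsumer freeFunction) {
        this.freeFunction = freeFunction;
    }

    /** Ties nativePtr to referent and returns a Runnable that frees it early. */
    Runnable register(Object referent, long nativePtr) {
        if (referent == null) {
            throw new IllegalArgumentException("referent is null");
        }
        if (nativePtr == 0) {
            throw new IllegalArgumentException("nativePtr is null");
        }
        // The action must not capture the referent, or it would never become unreachable.
        LongConsumer free = freeFunction;
        Cleaner.Cleanable cleanable = CLEANER.register(referent, () -> free.accept(nativePtr));
        return cleanable::clean;
    }

    /** Returns how many times the free function ran after two explicit frees. */
    static int demo() {
        int[] freed = {0};
        RegistrySketch registry = new RegistrySketch(ptr -> freed[0]++);
        Object owner = new Object();
        Runnable freeNow = registry.register(owner, 0x1000L);
        freeNow.run();  // frees the allocation
        freeNow.run();  // no effect: the action runs at most once
        return freed[0];
    }
}
```

`Cleanable.clean()` guarantees the action runs at most once, which matches the "no effect if already freed" wording in the Javadoc above.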

CleanerThunk

private class CleanerThunk implements Runnable {

private long nativePtr;

public CleanerThunk() {

this.nativePtr = 0;

}

public void run() {

if (nativePtr != 0) {

applyFreeFunction(freeFunction, nativePtr);

registerNativeFree(size);

}

}

public void setNativePtr(long nativePtr) {

this.nativePtr = nativePtr;

}

}

applyFreeFunction

/**

* Calls <code>freeFunction</code>(<code>nativePtr</code>).

* Provided as a convenience in the case where you wish to manually free a

* native allocation using a <code>freeFunction</code> without using a

* NativeAllocationRegistry.

*/

public static native void applyFreeFunction(long freeFunction, long nativePtr);

registerNativeFree

private static void registerNativeFree(long size) {

VMRuntime.getRuntime().registerNativeFree((int)Math.min(size, Integer.MAX_VALUE));

}

registerNativeAllocation

private static void registerNativeAllocation(long size) {

VMRuntime.getRuntime().registerNativeAllocation((int)Math.min(size,Integer.MAX_VALUE));

}

CleanerRunner

private static class CleanerRunner implements Runnable {

private final Cleaner cleaner;

public CleanerRunner(Cleaner cleaner) {

this.cleaner = cleaner;

}

public void run() {

cleaner.clean();

}

}

libcore/ojluni/src/main/java/sun/misc/Cleaner.java

Cleaner

create

public class Cleaner

extends PhantomReference<Object>

{

/**

* Creates a new cleaner.

*

* @param ob the referent object to be cleaned

* @param thunk

* The cleanup code to be run when the cleaner is invoked. The

* cleanup code is run directly from the reference-handler thread,

* so it should be as simple and straightforward as possible.

*

* @return The new cleaner

*/

public static Cleaner create(Object ob, Runnable thunk) {

if (thunk == null)

return null;

return add(new Cleaner(ob, thunk));

}

add

private static synchronized Cleaner add(Cleaner cl) {

if (first != null) {

cl.next = first;

first.prev = cl; // doubly linked list: insert at the head

}

first = cl;

return cl;

}

clean

/**

* Runs this cleaner, if it has not been run before.

*/

public void clean() {

if (!remove(this))

return;

try {

thunk.run();

} catch (final Throwable x) {

// error handling omitted in this excerpt

}

}
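`add` and `clean` together keep every live cleaner reachable through a static doubly linked list and make sure the thunk runs only once. A simplified sketch of that bookkeeping follows. `CleanerListSketch` is a hypothetical name, and the `removed` flag is a simplification chosen for readability rather than the technique the original class uses.

```java
final class CleanerListSketch {
    private static CleanerListSketch first;  // head of the list of live cleaners

    private CleanerListSketch next;
    private CleanerListSketch prev;
    private boolean removed;
    private final Runnable thunk;

    private CleanerListSketch(Runnable thunk) {
        this.thunk = thunk;
    }

    static CleanerListSketch create(Runnable thunk) {
        return add(new CleanerListSketch(thunk));
    }

    /** Inserts at the head, as in the excerpt. */
    private static synchronized CleanerListSketch add(CleanerListSketch cl) {
        if (first != null) {
            cl.next = first;
            first.prev = cl;
        }
        first = cl;
        return cl;
    }

    /** Unlinks cl; returns false if it was already unlinked. */
    private static synchronized boolean remove(CleanerListSketch cl) {
        if (cl.removed) {
            return false;
        }
        if (cl.prev != null) {
            cl.prev.next = cl.next;
        } else {
            first = cl.next;
        }
        if (cl.next != null) {
            cl.next.prev = cl.prev;
        }
        cl.next = null;
        cl.prev = null;
        cl.removed = true;
        return true;
    }

    /** Runs the thunk only on the first call. */
    void clean() {
        if (!remove(this)) {
            return;
        }
        thunk.run();
    }

    static synchronized int liveCount() {
        int n = 0;
        for (CleanerListSketch c = first; c != null; c = c.next) {
            n++;
        }
        return n;
    }

    /** Returns the total number of thunk runs. */
    static int demo() {
        int[] runs = {0};
        CleanerListSketch a = create(() -> runs[0]++);
        CleanerListSketch b = create(() -> runs[0]++);
        a.clean();
        a.clean();  // second call is a no-op
        b.clean();
        return runs[0];
    }
}
```

The list exists so the cleaners themselves stay strongly reachable until they have run; unlinking doubles as the "already cleaned" check.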

libcore/libart/src/main/java/dalvik/system/

VMRuntime.java

registerNativeAllocation

/**

* Registers a native allocation so that the heap knows about it and performs GC as required.

* If the number of native allocated bytes exceeds the native allocation watermark, the

* function requests a concurrent GC. If the native bytes allocated exceeds a second higher

* watermark, it is determined that the application is registering native allocations at an

* unusually high rate and a GC is performed inside of the function to prevent memory usage

* from excessively increasing. Memory allocated via system malloc() should not be included

* in this count. The argument must be the same as that later passed to registerNativeFree(),

* but may otherwise be approximate.

*/

@UnsupportedAppUsage

@libcore.api.CorePlatformApi

public native void registerNativeAllocation(long bytes);
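The two-watermark behaviour described in the comment can be pictured as a running byte counter checked against two thresholds. The sketch below is a toy model only: the class name, the threshold values passed in, and the `Action` enum are all hypothetical, and the real runtime decides these things natively.

```java
final class NativeAccountingSketch {
    enum Action { NONE, REQUEST_CONCURRENT_GC, BLOCKING_GC }

    private final long concurrentWatermark;
    private final long blockingWatermark;
    private long nativeBytes;

    NativeAccountingSketch(long concurrentWatermark, long blockingWatermark) {
        this.concurrentWatermark = concurrentWatermark;
        this.blockingWatermark = blockingWatermark;
    }

    /** Adds to the running total and reports which reaction the total would trigger. */
    synchronized Action registerNativeAllocation(long bytes) {
        nativeBytes += bytes;
        if (nativeBytes > blockingWatermark) {
            return Action.BLOCKING_GC;
        }
        if (nativeBytes > concurrentWatermark) {
            return Action.REQUEST_CONCURRENT_GC;
        }
        return Action.NONE;
    }

    /** Must be called with the same value that was registered earlier. */
    synchronized void registerNativeFree(long bytes) {
        nativeBytes -= bytes;
    }

    synchronized long nativeBytes() {
        return nativeBytes;
    }
}
```

The model also shows why the free must use the same size as the allocation: otherwise the counter drifts and the thresholds fire too early or too late.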

frameworks/base/libs/hwui/hwui/Bitmap.cpp

Bitmap.cpp(hwui)

class ANDROID_API Bitmap : public SkPixelRef {}

AllocPixelRef function

typedef sk_sp<Bitmap> (*AllocPixelRef)(size_t allocSize, const SkImageInfo& info, size_t rowBytes);

allocateHeapBitmap

sk_sp<Bitmap> Bitmap::allocateHeapBitmap(SkBitmap* bitmap) {

return allocateBitmap(bitmap, &android::allocateHeapBitmap);

}

allocateBitmap

static sk_sp<Bitmap> allocateBitmap(SkBitmap* bitmap, AllocPixelRef alloc) {

const SkImageInfo& info = bitmap->info();

// we must respect the rowBytes value already set on the bitmap instead of

// attempting to compute our own.

const size_t rowBytes = bitmap->rowBytes();

size_t size = 0;

if (!computeAllocationSize(rowBytes, bitmap->height(), &size)) {

return nullptr;

}

auto wrapper = alloc(size, info, rowBytes);

if (wrapper) {

wrapper->getSkBitmap(bitmap);

}

return wrapper;

}

android::allocateHeapBitmap

static sk_sp<Bitmap> allocateHeapBitmap(size_t size, const SkImageInfo& info, size_t rowBytes) {

void* addr = calloc(size, 1); // allocate the bitmap's pixel memory (size in bytes), zero-initialized

if (!addr) {

return nullptr;

}

return sk_sp<Bitmap>(new Bitmap(addr, size, info, rowBytes));

}

getSkBitmap

void Bitmap::getSkBitmap(SkBitmap* outBitmap) {

outBitmap->setHasHardwareMipMap(mHasHardwareMipMap);

if (isHardware()) {

if (uirenderer::Properties::isSkiaEnabled()) {

outBitmap->allocPixels(SkImageInfo::Make(info().width(), info().height(),

info().colorType(), info().alphaType(),

nullptr));

} else {

outBitmap->allocPixels(info());

}

uirenderer::renderthread::RenderProxy::copyGraphicBufferInto(graphicBuffer(), outBitmap);

if (mInfo.colorSpace()) {

sk_sp<SkPixelRef> pixelRef = sk_ref_sp(outBitmap->pixelRef());

outBitmap->setInfo(mInfo);

outBitmap->setPixelRef(std::move(pixelRef), 0, 0);

}

return;

}

outBitmap->setInfo(mInfo, rowBytes());

outBitmap->setPixelRef(sk_ref_sp(this), 0, 0);

}

allocateAshmemBitmap

sk_sp<Bitmap> Bitmap::allocateAshmemBitmap(SkBitmap* bitmap) {

return allocateBitmap(bitmap, &Bitmap::allocateAshmemBitmap);

}

allocateAshmemBitmap

sk_sp<Bitmap> Bitmap::allocateAshmemBitmap(size_t size, const SkImageInfo& info, size_t rowBytes) {

// Create new ashmem region with read/write priv

int fd = ashmem_create_region("bitmap", size);

if (fd < 0) {

return nullptr;

}

void* addr = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);

if (addr == MAP_FAILED) {

close(fd);

return nullptr;

}

if (ashmem_set_prot_region(fd, PROT_READ) < 0) {

munmap(addr, size);

close(fd);

return nullptr;

}

return sk_sp<Bitmap>(new Bitmap(addr, fd, size, info, rowBytes));

}

external/skia/src/core/

SkBitmap.cpp

setInfo

bool SkBitmap::setInfo(const SkImageInfo& info, size_t rowBytes) {

fPixelRef = nullptr; // Free pixels.

// newAT is the validated alpha type computed earlier in the function (omitted in this excerpt).

fPixmap.reset(info.makeAlphaType(newAT), nullptr, SkToU32(rowBytes));

return true;

}

setPixelRef

void SkBitmap::setPixelRef(sk_sp<SkPixelRef> pr, int dx, int dy) {

fPixelRef = kUnknown_SkColorType != this->colorType() ? std::move(pr) : nullptr;

void* p = nullptr;

size_t rowBytes = this->rowBytes();

// ignore dx,dy if there is no pixelref

if (fPixelRef) {

rowBytes = fPixelRef->rowBytes();

// TODO(reed): Enforce that PixelRefs must have non-null pixels.

p = fPixelRef->pixels();

if (p) {

p = (char*)p + dy * rowBytes + dx * this->bytesPerPixel();

}

}

SkPixmapPriv::ResetPixmapKeepInfo(&fPixmap, p, rowBytes);

}
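The pointer adjustment in `setPixelRef` is plain row-major addressing: skip `dy` whole rows, then `dx` pixels within the row. A sketch of the same arithmetic as a byte offset (`PixelOffsetSketch` and `byteOffset` are hypothetical names):

```java
final class PixelOffsetSketch {
    /** Byte offset of pixel (dx, dy) from the start of the buffer. */
    static long byteOffset(int dx, int dy, long rowBytes, int bytesPerPixel) {
        long rows = Math.multiplyExact((long) dy, rowBytes);
        long cols = Math.multiplyExact((long) dx, (long) bytesPerPixel);
        return Math.addExact(rows, cols);
    }
}
```

With 16-byte rows and 4 bytes per pixel, pixel (2, 3) sits at offset 3 * 16 + 2 * 4 = 56. Note that `rowBytes` comes from the pixel ref, so any row padding is already accounted for.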

external/skia/include/core/SkPixelRef.h

SkPixelRef

pixels

void* pixels() const { return fPixels; }

size_t rowBytes() const { return fRowBytes; }

external/skia/include/core/

SkImageInfo.h

makeAlphaType

SkImageInfo makeAlphaType(SkAlphaType newAlphaType) const {

return Make(fWidth, fHeight, fColorType, newAlphaType, fColorSpace);

}

external/skia/src/core/

SkPixmap.cpp

reset

void SkPixmap::reset(const SkImageInfo& info, const void* addr, size_t rowBytes) {

fPixels = addr;

fRowBytes = rowBytes;

fInfo = info;

}