Graphics

https://source.android.com/devices/graphics/index.html

Android graphics components

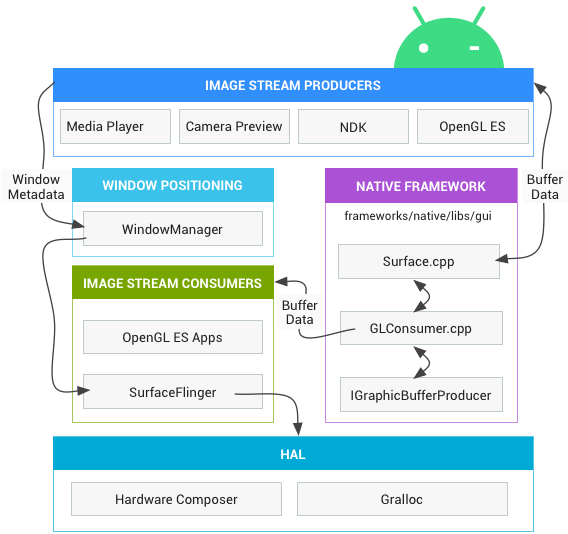

No matter what rendering API developers use, everything is rendered onto a “surface.” The surface represents the producer side of a buffer queue that is often consumed by SurfaceFlinger. Every window that is created on the Android platform is backed by a surface. All of the visible surfaces rendered are composited onto the display by SurfaceFlinger.

The following diagram shows how the key components work together:

Figure 1. How surfaces are rendered

The main components are described below:

Image Stream Producers

An image stream producer can be anything that produces graphic buffers for consumption. Examples include OpenGL ES, Canvas 2D, and mediaserver video decoders.

Figure 2. Graphic data flow through Android

OpenGL ES

OpenGL for Embedded Systems (OpenGL ES or GLES) is a subset[2] of the OpenGL computer graphics rendering application programming interface (API) for rendering 2D and 3D computer graphics such as those used by video games, typically hardware-accelerated using a graphics processing unit (GPU). It is designed for embedded systems like smartphones, tablet computers, video game consoles and PDAs. OpenGL ES is the “most widely deployed 3D graphics API in history”.[3]

The API is cross-language and multi-platform. The libraries GLUT and GLU are not available for OpenGL ES. OpenGL ES is managed by the non-profit technology consortium Khronos Group. Vulkan, a next-generation API from Khronos, is made for simpler high performance drivers for mobile and desktop devices.[4]

Vulkan

Application developers use Vulkan to create apps that execute commands on the GPU with significantly reduced overhead. Vulkan also provides a more direct mapping to the capabilities found in current graphics hardware compared to EGL and GLES, minimizing opportunities for driver bugs and reducing developer testing time.

Layers-Displays

https://source.android.com/devices/graphics/layers-displays

Layers

A layer is the most important unit of composition. A layer is a combination of a surface and an instance of SurfaceControl. Each layer has a set of properties that define how it interacts with other layers. Layer properties are described in the table below.

| Property | Description |
|---|---|
| Positional | Defines where the layer appears on its display. Includes information such as the positions of a layer’s edges and its Z order relative to other layers (whether it should be in front of or behind other layers). |
| Content | Defines how content displayed on the layer should be presented within the bounds defined by the positional properties. Includes information such as crop (to expand a portion of the content to fill the bounds of the layer) and transform (to show rotated or flipped content). |
| Composition | Defines how the layer should be composited with other layers. Includes information such as blending mode and a layer-wide alpha value for alpha compositing. |
| Optimization | Provides information not strictly necessary to correctly composite the layer, but that can be used by the Hardware Composer (HWC) device to optimize how it performs composition. Includes information such as the visible region of the layer and which portion of the layer has been updated since the previous frame. |
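The four property groups in the table can be pictured as fields of a single record. The following is a minimal sketch only: `layer_t`, `rect_t`, `blend_mode_t`, and `sort_layers_back_to_front` are hypothetical names chosen for illustration, not the SurfaceFlinger or HWC definitions, and the field set is an assumption based on the table.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical types for illustration; not the real SurfaceFlinger/HWC types. */
typedef struct {
    int32_t left, top, right, bottom;
} rect_t;

typedef enum { BLEND_NONE, BLEND_PREMULTIPLIED, BLEND_COVERAGE } blend_mode_t;

typedef struct {
    /* Positional: where the layer sits and how it stacks. */
    rect_t   display_frame;
    uint32_t z_order;            /* assumed: larger values are nearer the viewer */
    /* Content: how the buffer is mapped into the display frame. */
    rect_t   source_crop;
    int32_t  transform_degrees;  /* assumed encoding: 0, 90, 180, or 270 */
    /* Composition: how the layer blends with layers behind it. */
    blend_mode_t blend_mode;
    float    plane_alpha;        /* layer-wide alpha, 0.0 to 1.0 */
    /* Optimization: hints that are not required for correctness. */
    rect_t   visible_region;
    rect_t   damage;             /* area updated since the previous frame */
} layer_t;

/* qsort comparator: ascending Z order, written to avoid integer overflow. */
static int compare_z(const void *a, const void *b) {
    const layer_t *la = (const layer_t *)a;
    const layer_t *lb = (const layer_t *)b;
    return (la->z_order > lb->z_order) - (la->z_order < lb->z_order);
}

/* Sort back to front so that later layers are drawn over earlier ones. */
void sort_layers_back_to_front(layer_t *layers, size_t count) {
    qsort(layers, count, sizeof(layers[0]), compare_z);
}
```

Sorting by Z order before compositing is one simple way to honor the positional property; a real compositor also has to consider the layer stack and which display each layer belongs to.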

Displays

    graph LR
    SurfaceFlinger-->internalDisplay
    SurfaceFlinger-->externalDisplays
    SurfaceFlinger-->virtualDisplays

A display is another important unit of composition. A system can have multiple displays and displays can be added or removed during normal system operations. Displays are added/removed at the request of the HWC or at the request of the framework. The HWC device requests displays be added or removed when an external display is connected or disconnected from the device, which is called hotplugging. Clients request virtual displays, whose contents are rendered into an off-screen buffer instead of to a physical display.
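Hotplugging amounts to keeping a set of displays up to date as connect and disconnect events arrive. The sketch below models only that bookkeeping; `display_list_t`, `display_id_t`, `on_hotplug`, and `MAX_DISPLAYS` are hypothetical names, and the fixed capacity is an assumption made to keep the example short.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_DISPLAYS 8            /* assumed capacity for this sketch */

typedef uint64_t display_id_t;    /* hypothetical stand-in for a display handle */

typedef struct {
    display_id_t ids[MAX_DISPLAYS];
    size_t count;
} display_list_t;

/* Returns the index of id in the list, or -1 if it is not present. */
static int find_display(const display_list_t *list, display_id_t id) {
    for (size_t i = 0; i < list->count; i++) {
        if (list->ids[i] == id) return (int)i;
    }
    return -1;
}

/* Handle a hotplug event: add the display on connect, remove it on
 * disconnect. Returns true if the list changed. */
bool on_hotplug(display_list_t *list, display_id_t id, bool connected) {
    int index = find_display(list, id);
    if (connected) {
        if (index >= 0 || list->count == MAX_DISPLAYS) return false;
        list->ids[list->count++] = id;
        return true;
    }
    if (index < 0) return false;
    /* Close the gap left by the removed entry. */
    for (size_t i = (size_t)index; i + 1 < list->count; i++) {
        list->ids[i] = list->ids[i + 1];
    }
    list->count--;
    return true;
}
```

Duplicate connects and disconnects for unknown displays are ignored here; how a real implementation treats them is not covered by the text above.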

Virtual displays

SurfaceFlinger supports an internal display (built into the phone or tablet), external displays (such as a television connected through HDMI), and one or more virtual displays that make composited output available within the system. Virtual displays can be used to record the screen or send the screen over a network. Frames generated for a virtual display are written to a BufferQueue.

Virtual displays may share the same set of layers as the main display (the layer stack) or have their own set. There’s no VSYNC for a virtual display, so the VSYNC for the internal display triggers composition for all displays.

On HWC implementations that support them, virtual displays can be composited with OpenGL ES (GLES), HWC, or both GLES and HWC. On nonsupporting implementations, virtual displays are always composited using GLES.

The WindowManager can ask SurfaceFlinger to create a visible layer for which SurfaceFlinger acts as the BufferQueue consumer. It’s also possible to ask SurfaceFlinger to create a virtual display, for which SurfaceFlinger acts as the BufferQueue producer.
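The producer and consumer roles can be illustrated with a toy buffer queue. This is a simplified model under stated assumptions, not the Android BufferQueue: `buffer_queue_t`, the `bq_*` functions, and the three-slot size are hypothetical, and there is no locking, fencing, or blocking.

```c
#include <stdbool.h>
#include <stddef.h>

#define SLOT_COUNT 3   /* assumed number of buffers in this sketch */

typedef enum { SLOT_FREE, SLOT_DEQUEUED, SLOT_QUEUED, SLOT_ACQUIRED } slot_state_t;

typedef struct {
    slot_state_t state[SLOT_COUNT];
    int fifo[SLOT_COUNT];   /* queued slot indices, oldest first */
    size_t fifo_len;
} buffer_queue_t;

/* Producer: take a free slot to draw into. Returns -1 if none is free. */
int bq_dequeue(buffer_queue_t *q) {
    for (int i = 0; i < SLOT_COUNT; i++) {
        if (q->state[i] == SLOT_FREE) {
            q->state[i] = SLOT_DEQUEUED;
            return i;
        }
    }
    return -1;
}

/* Producer: hand a filled slot to the consumer. */
bool bq_queue(buffer_queue_t *q, int slot) {
    if (slot < 0 || slot >= SLOT_COUNT || q->state[slot] != SLOT_DEQUEUED) return false;
    q->state[slot] = SLOT_QUEUED;
    q->fifo[q->fifo_len++] = slot;
    return true;
}

/* Consumer: take the oldest queued slot. Returns -1 if nothing is queued. */
int bq_acquire(buffer_queue_t *q) {
    if (q->fifo_len == 0) return -1;
    int slot = q->fifo[0];
    for (size_t i = 1; i < q->fifo_len; i++) q->fifo[i - 1] = q->fifo[i];
    q->fifo_len--;
    q->state[slot] = SLOT_ACQUIRED;
    return slot;
}

/* Consumer: return a slot to the free pool once it is no longer displayed. */
bool bq_release(buffer_queue_t *q, int slot) {
    if (slot < 0 || slot >= SLOT_COUNT || q->state[slot] != SLOT_ACQUIRED) return false;
    q->state[slot] = SLOT_FREE;
    return true;
}
```

In these terms, for a visible layer the app calls the producer functions and SurfaceFlinger calls the consumer functions. For a virtual display the roles flip: SurfaceFlinger produces the composited frames and a client such as a screen recorder consumes them.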

Vsync

https://source.android.com/devices/graphics/implement-vsync

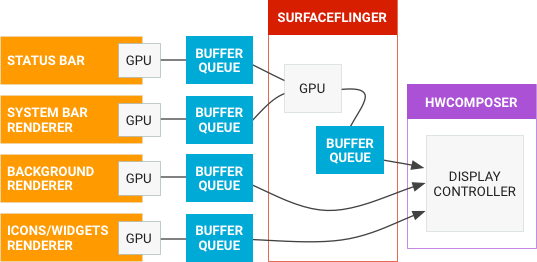

The VSYNC signal synchronizes the display pipeline. The display pipeline consists of app rendering, SurfaceFlinger composition, and the Hardware Composer (HWC) presenting images on the display. VSYNC synchronizes the time apps wake up to start rendering, the time SurfaceFlinger wakes up to composite the screen, and the display refresh cycle. This synchronization eliminates stutter and improves the visual performance of graphics.

The HWC generates VSYNC events and sends the events to SurfaceFlinger through the callback:

    typedef void (*HWC2_PFN_VSYNC)(hwc2_callback_data_t callbackData,
            hwc2_display_t display, int64_t timestamp);

SurfaceFlinger controls whether the HWC generates VSYNC events by calling setVsyncEnabled. SurfaceFlinger uses setVsyncEnabled to turn on VSYNC event generation so that it can synchronize with the refresh cycle of the display. Once SurfaceFlinger is synchronized to the display refresh cycle, it uses setVsyncEnabled to stop the HWC from generating VSYNC events. If SurfaceFlinger detects a difference between the actual VSYNC and the VSYNC it previously established, SurfaceFlinger re-enables VSYNC event generation.
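The enable and disable decision can be sketched as a comparison between an observed timestamp and a model of the refresh cycle. Everything here is an assumption for illustration: `vsync_model_t`, `vsync_error_ns`, `vsync_check`, and the tolerance value are hypothetical, and the flag stands in for the point where a real implementation would call setVsyncEnabled.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical model of the display refresh cycle. */
typedef struct {
    int64_t period_ns;         /* modeled refresh period */
    int64_t last_vsync_ns;     /* timestamp of one modeled VSYNC */
    int64_t tolerance_ns;      /* assumed: allowed error before resync */
    bool    hw_vsync_enabled;  /* stands in for the setVsyncEnabled state */
} vsync_model_t;

/* Distance from actual_ns to the nearest VSYNC predicted by the model. */
int64_t vsync_error_ns(const vsync_model_t *m, int64_t actual_ns) {
    int64_t phase = (actual_ns - m->last_vsync_ns) % m->period_ns;
    if (phase < 0) phase += m->period_ns;
    return phase <= m->period_ns / 2 ? phase : m->period_ns - phase;
}

/* Compare an observed timestamp with the model. Hardware VSYNC events stay
 * off while the model matches and are turned back on when it drifts.
 * Returns the new enabled state. */
bool vsync_check(vsync_model_t *m, int64_t actual_ns) {
    m->hw_vsync_enabled = vsync_error_ns(m, actual_ns) > m->tolerance_ns;
    return m->hw_vsync_enabled;
}
```

With a 16,666,667 ns period and a 0.5 ms tolerance, an observation 0.1 ms away from a predicted VSYNC leaves hardware events off, while one 4 ms away turns them back on.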

VSYNC offset

The app and SurfaceFlinger render loops are synchronized to the hardware VSYNC. On a VSYNC event, the display begins showing frame N while SurfaceFlinger begins compositing windows for frame N+1. The app handles pending input and generates frame N+2.

Synchronizing with VSYNC delivers consistent latency. It reduces errors in apps and SurfaceFlinger and minimizes displays drifting in and out of phase with each other. This assumes that app and SurfaceFlinger per-frame times don’t vary widely. The resulting latency is at least two frames.

To reduce this latency, you can use VSYNC offsets, which shift the app and composition signals relative to hardware VSYNC and so shorten the input-to-display latency. This is possible because app plus composition usually takes less than 33 ms.

The result of VSYNC offset is three signals with the same period and offset phases:

- HW_VSYNC_0: Display begins showing next frame.
- VSYNC: App reads input and generates next frame.
- SF_VSYNC: SurfaceFlinger begins compositing for next frame.

With VSYNC offset, SurfaceFlinger receives the buffer and composites the frame while the app simultaneously processes the input and renders the frame.

Note: VSYNC offsets reduce the time available for app and composition, providing a greater chance for error.
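The trade-off in the note can be made concrete with a little arithmetic. The sketch below assumes both offsets are measured from HW_VSYNC_0 and that 0 <= app offset < SurfaceFlinger offset < period; the type and function names are hypothetical, and the budget formulas reflect the assumed goal that app rendering and composition both finish within one refresh period.

```c
#include <stdint.h>

/* Hypothetical configuration; offsets are measured from HW_VSYNC_0 and are
 * assumed to satisfy 0 <= app_offset_ns < sf_offset_ns < period_ns. */
typedef struct {
    int64_t period_ns;
    int64_t app_offset_ns;   /* phase of the app VSYNC signal */
    int64_t sf_offset_ns;    /* phase of the SF_VSYNC signal */
} vsync_offsets_t;

typedef struct {
    int64_t app_wake_ns;
    int64_t sf_wake_ns;
    int64_t next_hw_vsync_ns;
} frame_schedule_t;

/* Wake-up times that follow one hardware VSYNC timestamp. */
frame_schedule_t schedule_frame(const vsync_offsets_t *cfg, int64_t hw_vsync_ns) {
    frame_schedule_t s;
    s.app_wake_ns = hw_vsync_ns + cfg->app_offset_ns;
    s.sf_wake_ns = hw_vsync_ns + cfg->sf_offset_ns;
    s.next_hw_vsync_ns = hw_vsync_ns + cfg->period_ns;
    return s;
}

/* Time the app has before SurfaceFlinger wakes in the same period. */
int64_t app_budget_ns(const vsync_offsets_t *cfg) {
    return cfg->sf_offset_ns - cfg->app_offset_ns;
}

/* Time SurfaceFlinger has before the next hardware VSYNC. */
int64_t sf_budget_ns(const vsync_offsets_t *cfg) {
    return cfg->period_ns - cfg->sf_offset_ns;
}
```

For example, with a 16,666,667 ns period, a 1 ms app offset, and a 6 ms SurfaceFlinger offset, the app has 5 ms and SurfaceFlinger has about 10.7 ms. Both budgets are smaller than a full period, which is the reduced margin the note warns about.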

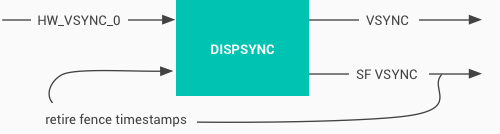

DispSync

DispSync maintains a model of the periodic hardware-based VSYNC events of a display and uses that model to execute callbacks at specific phase offsets from the hardware VSYNC events.

DispSync is a software phase-lock loop (PLL) that generates the VSYNC and SF_VSYNC signals used by Choreographer and SurfaceFlinger, even if not offset from hardware VSYNC.
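The idea of modeling periodic VSYNC events and firing callbacks at a phase offset can be sketched as follows. This is not the DispSync algorithm: it swaps in a plain average of timestamp deltas for the period estimate, and `sync_model_t`, `fit_model`, and `next_event_ns` are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model: a period plus one timestamp known to be a VSYNC. */
typedef struct {
    int64_t period_ns;
    int64_t reference_ns;
} sync_model_t;

/* Fit the model from n >= 2 increasing hardware VSYNC timestamps.
 * The period is the average spacing; the newest sample is the reference.
 * Returns 0 on success, -1 if the samples are unusable. */
int fit_model(sync_model_t *m, const int64_t *ts, size_t n) {
    if (n < 2 || ts[n - 1] <= ts[0]) return -1;
    m->period_ns = (ts[n - 1] - ts[0]) / (int64_t)(n - 1);
    m->reference_ns = ts[n - 1];
    return 0;
}

/* First time strictly after now_ns that lies offset_ns past a modeled VSYNC. */
int64_t next_event_ns(const sync_model_t *m, int64_t now_ns, int64_t offset_ns) {
    int64_t base = m->reference_ns + offset_ns;
    int64_t delta = now_ns - base;
    int64_t k = delta / m->period_ns;
    /* C division truncates toward zero; adjust to get floor for negatives. */
    if (delta < 0 && delta % m->period_ns != 0) k--;
    return base + (k + 1) * m->period_ns;
}
```

Once the model is fitted, a caller can schedule app and SurfaceFlinger wake-ups by passing different offsets to `next_event_ns`, without receiving every hardware VSYNC event.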

Figure 1. DispSync flow